In this article, we shall study about data preprocessing, a fundamental step in data mining. Data preprocessing in data mining involves transforming and refining raw data into a clean and structured format ready for analysis and modeling. Additionally, we cannot work with raw data, which is also an important step of the data mining process. With this data, we should check the quality before applying any machine learning or data mining algorithm. On the one hand, preprocessing mainly ensures that data is of quality. It can check the data quality to ensure it is accurate, complete, consistent, timely, valid, and interpretable. The core tasks include data cleaning, integration, reduction, and transformation.

Data Preprocessing in Data Mining: An Overview

Data preprocessing transforms raw data into a usable format. It addresses inconsistencies, errors, and incomplete information in real-world data, making it suitable for analysis. This step is vital for successful data mining and machine learning, as it improves the speed and accuracy of algorithms. It standardizes data formats, resolves human errors, and eliminates duplicates.

Databases consist of data points, called samples or records, described by features or attributes. Effective data preprocessing in data mining is needed to build accurate models. It handles issues like mismatched formats when combining data from different sources. By removing inconsistencies and missing values, preprocessing ensures a complete, accurate dataset.

Get curriculum highlights, career paths, industry insights and accelerate your data science journey.

Download brochure

Purpose of Data Preprocessing in Data Mining

Data preprocessing is fundamentally aimed at making the raw data required for the analysis ready for consumption by machine learning models, and it’s important that the data is of high quality so the analysis can honestly proceed. Below are several key objectives and benefits of data preprocessing in data mining:

Ensuring Data Quality

Any data-based analysis strongly depends on data quality. Data preprocessing entails identifying and correcting errors of accuracy, completeness, consistency, and so on, as well as timeliness. By systematically cleaning the data, organizations can:

- Identify and correct shortcomings that could easily mislead your analyses.

- In other words, fill in missing values so you have a complete dataset.

- Eliminate duplicates that could distort results or otherwise lead to biased conclusions.

- Working with multiple sources of datasets means that you need to normalize data formats to ensure consistency in those datasets.

Enhancing Model Performance

For machine learning models to thrive, your data needs to be good. When applying machine learning algorithms, well-preprocessed data can result in big performance improvements. This is achieved by:

- Reducing noise and irrelevant information that can make the model overfit, learn the noise and not the signal.

- This allows us to simplify the complex datasets and make the algorithms simpler so they can learn the pattern in an easy way without being confused by unnecessary features.

- It makes sure that the input data is compliant with the assumptions of the algorithm being used so the model can be more accurate and reliable.

Facilitating Data Analysis

Data preprocessing in data mining refers to performing some analyses on raw data to give it a more structured format that facilitates analysis and makes it more efficient. This enables data scientists and analysts to:

- Very quickly visualize data to spot trends/patterns.

- Perform data exploration (EDA) and develop hypotheses from observed trends.

- You can have a bit more confidence in the data being clean or the data being the right way to model it.

Reducing Computational Costs

However, processing large datasets can be very computationally expensive. Data preprocessing reduces the size of the dataset while retaining essential information, thus:

- There’s a decreasing dependence on time and resources for data analysis and model training.

- It helps speed up the iteration during the model development process and allows data scientists to concentrate on refining the models instead of wasting time collecting data for preparation.

Improving Interpretability

Clean, well-structured data helps to make analysis results more understandable. Such data insights are crucial for the stakeholders that rely on them in making decisions. By preprocessing data:

- Visualizations can be clearer, more meaningful and better at communicating what is found.

- It allows decision makers to understand underlying data trends and the relationship with business outcomes thus informing their decision making.

Making Better Insights and Predictions Possible

Data preprocessing in data mining tries to prepare data for extracting actionable insights. By preparing data appropriately:

- It allows organizations to find hidden patterns with which to inform strategic decisions.

- That can create better planning and resource allocation because the predictive models are more accurate.

Data Regulations Compliance

In today’s data world, they must comply with different data protection regulations (such as GDPR). Data preprocessing plays a crucial role in ensuring that data is handled ethically and legally by:

- Making sensitive information anonymous to protect individual privacy.

- Attaching consent to data collection and processing in order to lower the risk of facing legal penalties.

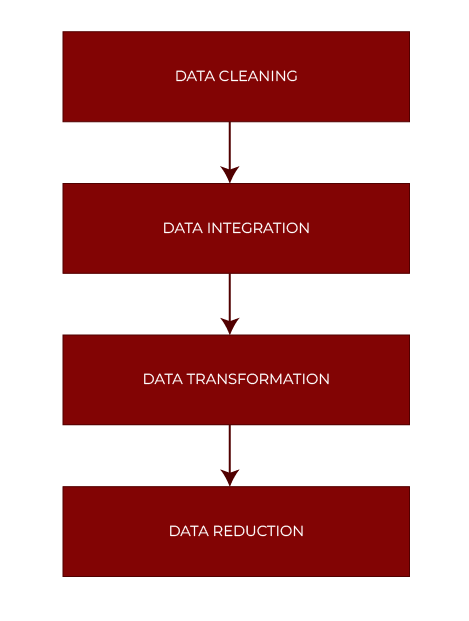

Stages of Data Preprocessing in Data Mining

Data preprocessing consists of many steps that will make the data usable and ensure high quality for analysis. The main stages include:

Data Cleaning

Data cleansing or data cleaning is the first and the most important step in preprocessing. It relates to this stage, identifying and removing errors, cleaning out duplicate records, handling missing values, and producing clean data. The dataset should be accurate and, hence, complete to enable efficient analysis and modeling, as this is the goal. Common techniques used in data cleaning include:

- Filling Missing Values: Missing data can be handled by methods like mean substitution, regression imputation, or using predefined constants.

- Outlier Detection and Removal: Correct analytical analysis of outliers is necessary.

- Noise Reduction: A good way to smooth out noise in the data is techniques like binning or clustering.

Data Integration

Data integration is the process of combining data from multiple datasets into one dataset. Data is collected from various systems, often resulting in integration. However, this may cause inconsistencies and redundancy that must be resolved to get an accurate analysis. Techniques used in data integration include:

- Data Consolidation: Merging datasets into a physical location from which they can be more easily accessed.

- Data Virtualization: Without physical consolidation, users can take a real-time view of data from disparate sources.

- Data Propagation: Writing data from one location while copying to another location, balancing those two writes to ensure consistency.

Data Transformation

Data transformation means converting the data to a form suitable for analysis. This stage is critical to ensuring that our data can be processed quickly with algorithms. Transformation techniques may include:

- Normalization: It is important, especially for distance based algorithms, to adjust the values of data to a common range.

- Aggregation: Reducing detailed data into a concise form, such as data cubes for multidimensional analysis.

- Discretization: Reducing continuous data into categories for analysis.

Data Reduction

Data reduction will reduce the dataset while retaining needed information. Especially for processing large volumes of data. Techniques for data reduction include:

- Dimensionality Reduction: Reductions in the number of feature dataset that does not disturb variability can be achieved by Techniques such as Principal Component Analysis (PCA).

- Feature Selection: Finding the optimal features to analyze, either identifying or removing them.

- Numerosity Reduction: The use of e.g. techniques for replacing detailed data with smaller, more manageable representations.

Use of Data Preprocessing in Data Mining

We noted earlier that this is one of the reasons data preprocessing is important in the early stages of the development of machine learning and AI applications. In AI, data is prepared in order to optimize how the data is cleaned, transformed, and structured to assist in a new model with less computing power used. A good data preprocessing step will go a long way in developing a set of components or tools to create the preconditions for quickly prototyping a set of ideas or running experiments to improve business processes or customer satisfaction. Furthermore, preprocessing can help the way in which data is arranged for a recommendation engine by improving the age ranges of customers used to categorize.

Additionally, it can increase the process of developing and expanding data and help the business in more manageable BI. For example, customers in small size, category or regions may behave differently in different regions. If BI teams could incorporate such finding into BI dashboards, this backend processing of the data into the appropriate formats will provide for handling the data the way BI people will want to.

Data preprocessing is a subprocess of web mining in customer relationship management (CRM), and it is a broad concept. We often have to undergo pre-processing of the Web usage logs to get to meaningful data sets known as user transactions, which are actually groups of URL references. Sometimes, it will store sessions to help identify the user, and the websites they request, and in what order and when. Once extracted from data, these are more meaningful, e.g., used for consumer analysis, product promotion, or customisation.

Also read: Clustering in Data Mining

Conclusion

Data preprocessing is an important step in the data mining process, and data preprocessing is important to pass the data in a ready form for further analysis. In this article, I share a comprehensive guide to data preprocessing techniques, including data cleaning, integration, reduction, and transformation. The article walks through some practical examples and code snippets to help readers grasp the core concepts and techniques and teach them what steps to take to apply these techniques to their own data mining projects. If you are a beginner or an experienced data miner, you will get some useful information and resources in this article to achieve higher quality from your data. If you want to learn about this in more detail and get a professional certificate, consider pursuing Hero Vired’s Accelerator Program in Business Analytics and Data Science, which is offered in collaboration with edX and Harvard University.

FAQs

Data preprocessing is a step of turning raw data into a form we can understand. Moreover, it is also an important step in data mining because we can't work with raw data. Before applying machine learning or data mining algorithms we should check the quality of the data.

Data integration in data mining is a technique that allows data from multiple different sources to be integrated coherently into a unified view. The data sources that make up these data may include several data cubes, databases or flat files.

Data preprocessing refers to Data wrangling, data transformation, data reduction, feature selection and feature scaling which are all types of approaches teams use to reorganize raw data to a format that is usable for some algorithms.

Data mining tools are powerful statistical, mathematical, and analytics elements tools that primarily sort through large sets of data in an attempt to identify trends, patterns and relationships that can be used to make informed decisions and plan.

Updated on February 22, 2025