Operating systems have become a necessity when managing simultaneously running programs in today’s world. Nowadays multicore processors and distributed systems have become very common and there is a need to utilise them effectively, or in other words, have efficient resource management.

One of the most fundamental challenges faced in such environments is the Critical Section Problem. This problem deals with ensuring that when one process is accessing a shared resource, no other process can access that resource simultaneously, thus avoiding inconsistencies and unpredictable behaviours.

In this article, we will understand the critical section problem in OS, along with its various requirements, solutions, etc. We will also examine the advantages and disadvantages of the critical section problem in OS and the different applications in the real world.

What is Concurrency in OS?

In operating systems, concurrency is the ability of an OS to manage several tasks or processes concurrently, improving responsiveness and efficiency. As distributed systems and multi-core processors become more common, effective concurrency management is essential to utilising hardware to its maximum potential. Resource sharing brought on by concurrency leads to problems including resource scarcity, race situations, deadlocks, and starvation. While concurrency guarantees system responsiveness and efficiency, it necessitates rigorous control over shared resource access to prevent two processes from being in the critical area at the same time.

Types of Concurrency Issues:

- Race condition: This issue occurs when multiple processes access and modify shared data without proper synchronisation, leading to unpredictable results.

- Deadlocks: These occur when processes create a circular dependency by waiting endlessly for resources that are held by one another.

- Starvation: It occurs when a process waits indefinitely to enter the critical section because other processes are repeatedly granted access.

- Livelock: This is a state where processes constantly alter their states in response to one another without making any progress.

Also Read: Deadlock in OS

Before we move on to what is the critical section problem in OS, we need to understand what exactly is a critical section.

POSTGRADUATE PROGRAM IN

Multi Cloud Architecture & DevOps

Master cloud architecture, DevOps practices, and automation to build scalable, resilient systems.

What is the Critical Section?

A critical section is an important part of a program in which the shared resources such as variables, memory files, etc., are accessed or modified. In other words, A critical section is a set of instructions, statements, or code segments that must be executed atomically, like accessing a resource like a file, variables, I/O port, global data, etc. The execution of the key section concurrently by two or more processes may result in inconsistent data or unexpected actions.

For example, consider two processes attempting to edit a common variable- myVar. If there is no control, two processes might read and update myVar with the same value, storing inconsistent results. We will understand more similar examples in detail.

Phases of Process Execution

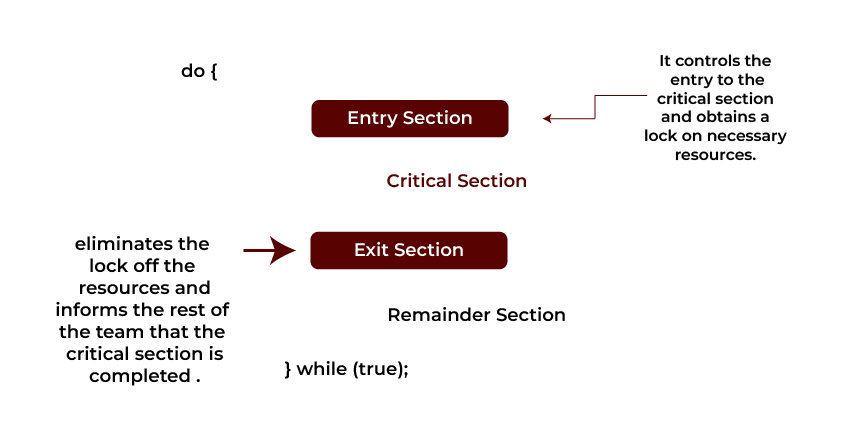

The following are the phases of process execution in the critical section of OS:

- Entry Section: It prepares the process to enter the critical section, checking for locks or semaphores to ensure mutual exclusion.

- Critical Section: This is the actual code section where the process interacts with shared resources, ensuring that only one process is executed in this section at any time.

- Exit Section: After the crucial part’s operations are finished, the exit section releases locks or resources so that other processes can use the shared resources.

- Remainder Section: It performs non-essential tasks that don’t require synchronisation and can be completed concurrently with other processes.

Also Read: Process Synchronization in OS

Here is the pseudocode to implement the critical section:

do {

flag = 1;

while(flag); // (entry section)

// critical section

if (!flag)

// remainder section

} while(true);

What is the Critical Section Problem in OS?

A critical section can be simply defined as a part of a program where computing resources such as variables, memory, and files are used. The key issue with a critical section is when multiple threads or processes try to access their respective critical sections at the same time resulting in a race condition. In other words, the outcome is dependent on the order and scheduling of the processes.

What is a Race Condition?

A race condition describes the state in which the behaviour of the system is caused by the order in which processes or threads come into execution. Race conditions can be avoided by proper handling of critical sections where no two processes try to operate on the same resource at one time.

Let’s understand the problem of the critical section in OS with the help of a simple real-world example:

Imagine two persons accessing an online bank account to withdraw money:

- P1 checks the balance, sees $500, and tries to withdraw $250.

- Simultaneously, P2 checks the balance, sees $500, and tries to withdraw $400.

Here, the balance might be updated inaccurately if both users withdraw at the same time without synchronisation, which would leave the bank in an inconsistent state.

Critical Section Requirements

To address the critical section problem effectively in the operating system, the below requirements must be fulfilled:

- Mutual Exclusion

This is the core requirement of the critical section problem. It guarantees that critical sections can be entered by only one process at a given time. In cases where one process is currently accessing the shared resource, other processes intending to enter the critical section must be put on hold. This eliminates occurrences of race conditions and maintains data integrity.

2. Progress

This creates a scenario whereby if no process is already present in the critical section and processes are standing by in the queue to get access, at least one of the processes ahead in the queue will eventually go into the critical section. With this, processes are not able to wait forever and access the critical section when no other process is currently using it.

3. Bounded Waiting

Bounded waiting guarantees that every process is allowed to enter the critical section in a reasonable time interval. Otherwise, it will stand to waste time for no reason. Every process is assured a fair chance to enter the critical section at most in a reasonable period. This condition prohibits starvation states where some processes are never allowed into the critical section because of the constant traffic of other processes bypassing the queues.

4. No Assumptions About Relative Speed or Number of CPUs

Most importantly, the solution to the critical section problem must not be dependent on the speed of the processes or the number of CPUs present in the system. The solution should be valid for a uniprocessor as well as a multiprocessor environment. No assumptions should be made concerning the speed of processing.

Now, we will understand the solutions to the critical section problem in OS.

82.9%

of professionals don't believe their degree can help them get ahead at work.

Solutions to Critical Section Problems in OS

Process synchronisation plays an essential part in resolving the critical section problem in the OS. Various solutions have been proposed to solve the critical section, and here are the common approaches:

Software Solutions

Software-based solutions rely on algorithms to coordinate processes. Below are two classical algorithms: Dekker’s and Peterson’s algorithms.

- Dekker’s algorithm

Dekker’s Algorithm was regarded as one of the earliest efficient methods to guarantee mutual exclusion. It employs the use of flags and a turn variable for synchronisation on the critical section of code.

Pseudocode:

Process i:

flag[i] = true

while flag[j]:

if turn != i:

flag[i] = false

wait until turn == i

flag[i] = true

critical_section()

turn = j

flag[i] = false

Here,

- Each process sets its flag to true when it wants to enter the critical section.

- If the other process’s flag is also true, the process yields its turn and waits until it’s allowed to proceed.

2. Peterson’s algorithm

Peterson’s Algorithm is less complicated and is preferred in the two-process case. It manages the solution with two flags and a turn variable. It is simpler than Dekker’s algorithm and ensures mutual exclusion, progress, and bounded waiting.

Pseudocode:

flag[i] = true

turn = j

while flag[j] and turn == j:

wait

critical_section()

flag[i] = false

Here,

- Each process signals its intention to enter the critical section by setting its flag to true.

- The turn variable gives priority to the other process, preventing both from entering simultaneously.

Hardware Solutions

Hardware solutions were also provided to handle the critical section to support the mutual exclusion but these were more complex in implementation.

- Test-and-Set Lock

It is a hardware instruction that works atomically and cannot be interrupted by other processes. It checks and sets a lock in one step. Let’s see the pseudocode for the Test-and-Set lock approach:

Pseudocode:

while TestAndSet(lock):

wait

critical_section()

lock = false

2. Compare-and-Swap

It is another solution to handle the mutual exclusion in OS, which checks if a memory location has a certain value, and if so, it swaps the value atomically. Let’s see the pseudocode for the compare-and-swap:

Pseudocode:

while CompareAndSwap(lock, 0, 1) == false:

wait

critical_section()

lock = 0

Synchronisation Solutions

Synchronisation primitives, such as mutexes and semaphores, abstract away the complexities of hardware instructions and software algorithms.

- Mutex

A mutex (a contraction of mutual exclusion) refers to a locking system that prevents interference with a critical section code segment more than once by allowing only a single process at any one instance to access it.

Pseudocode:

wait (mutex); //locks

…

Critical Section

…

signal (mutex); //unlocks

Mutex is used in solving the famous producer-consumer problem in OS and is simple to use. Mutex helps in preventing the race conditions but may lead to deadlocks if not handled carefully.

2. Semaphores

A semaphore is regarded as a more sophisticated means of synchronisation. For instance, it can be a counting semaphore whereby the critical section can only fully be accessed by a specified number of user processes concurrently.

Pseudocode:

Semaphore s = 1 // Binary semaphore

wait(s)

critical_section() // critical section

signal(s)

Semaphores are flexible which allows for more than one process in the critical section.

It also helps in managing resource allocation. Semaphores are complex to use compared to mutexes and may also lead to deadlocks.

Classical Problems in Synchronisation

Producer-Consumer Problem

In this case study, there exist two processes known as the producer and consumer which use the same buffer of a constant size. The buffer is used by the producer to store items and it is used by the consumer to take out items. The issue comes when the buffer becomes full i.e., the producer shall remain idle or when the buffer is empty (the consumer will be idle).

Pseudocode for Solution for Producer:

do {

//Produce an item

wait(empty);

wait(mutex);

//Place in the buffer

signal(mutex);

signal(full);

} while (true)

Pseudocode for Solution for Consumer:

do {

wait(full);

wait(mutex);

//Consume item from buffer

signal(mutex);

signal(empty);

} while (true)

Dining Philosophers Problem

There are five philosophers positioned around a table with five chopsticks that are now situated between various pairs of philosophers. Each philosopher has to take two sticks to be able to eat but between any two philosophers, there lies only one chopstick. The problem is to avoid the deadlock where each of the philosophers takes the first available wooden chopstick although their second stick remains out of reach.

Pseudocode:

process P[i]

while (true) {

chopstick[id].acquire(); // Pick up left chopstick

chopstick[(id + 1) % 5].acquire(); // Pick up right chopstick

// Eat

chopstick[id].release(); // Put down left chopstick

chopstick[(id + 1) % 5].release(); // Put down right chopstick

}

Real-World Applications of the Critical Section Problem

In software engineering applications, it is essential to comprehend and handle the crucial section problem. Here are a few real-world applications for the same:

- Database management systems (DBMS): Often complex systems are used that input and output data from a common base and use it at the same time. The appropriate implementation of certain synchronisation primitives such as locks & Transactions, guarantees that such operations can be carried out while avoiding any data corruption.

- Operating Systems (OS): Operating systems are able to use any number of synchronisation mechanisms to avoid contention for resources. The critical section has more forms and may be found in different subsystems such as memory, file, and device drivers.

- Real-time systems: In real-time systems, timing, and resource allocation are important factors. The use of degrees of strategies for resolving the critical-section problem guarantees that higher-priority executing tasks do not experience unnecessary delays.

- Web servers: Web servers address many client requests at the same time. Timely management of critical sections is essential to ensure the correctness of session states and access to shared resources.

- Multithreading: In multi-threaded applications, the critical sections are used to manage common data structures shared between threads, such as lists, queues, or caches. It is very important to employ solutions to the critical section problem in order to preserve the consistency of the data collected.

- Embedded Systems: It is important for embedded systems to manage shared resources due to the fact that this is important for both the operation and the performance of the system. Synchronisation mechanisms are applied to manage access to the devices and/or shared memory.

Also Read: Multithreading in Java

Real-World Example of Critical Section Problem in C++

Suppose, in a bank account where multiple threads (users) are doing multiple transactions, and updating a shared account balance. If multiple employees update the balance at the same time, race conditions could occur, leading to inconsistent results. This is a typical critical section problem.

To deal with the problem of modifying the savings account balance with multiple users, a mutex will be used which allows only one thread to modify the balance at any one particular time.

Code:

#include <iostream>

#include <thread>

#include <mutex>

// Shared resource (critical section variable)

int account_balance = 0;

// Mutex to control access to the critical section

std::mutex balance_mutex;

// Function to increment the A/C balance

void depositCash(int amount, int t_no) {

for (int i = 0; i < t_no; ++i) {

// Entry section - attempting to enter the critical section

balance_mutex.lock(); // Lock the mutex to ensure exclusive access to the critical section

// Critical section - modifying the shared resource

account_balance = account_balance + amount;

std::cout << "Deposited amount: $" << amount << ", Updated balance: $" << account_balance << std::endl;

// Exit section - leaving the critical section

balance_mutex.unlock(); // Unlock the mutex

// Remainder section

}

}

int main() {

// Two bank users (threads) updating the account balance

std::thread p1(depositCash, 300, 5); // p1 deposits $100, 10 times

std::thread p2(depositCash, 30, 5); // p2 deposits $50, 10 times

// Wait for both threads to complete their transactions

p1.join();

p2.join();

std::cout << "Final A/C balance: $" << account_balance << std::endl;

return 0;

}

Output:

Deposited amount: $300, Updated balance: $300

Deposited amount: $300, Updated balance: $600

Deposited amount: $300, Updated balance: $900

Deposited amount: $300, Updated balance: $1200

Deposited amount: $300, Updated balance: $1500

Deposited amount: $30, Updated balance: $1530

Deposited amount: $30, Updated balance: $1560

Deposited amount: $30, Updated balance: $1590

Deposited amount: $30, Updated balance: $1620

Deposited amount: $30, Updated balance: $1650

Final A/C balance: $1650

Explanation:

In this example,

- We have created a shared resource account_balance (critical section) accessed by multiple threads.

- A mutex (balance_mutex) is created to ensure mutual exclusion, allowing only one thread to modify account_balance at a time, preventing race conditions.

- Two threads (p1 and p2) execute depositCash, where one deposits $300 and the other $30, each running 5 times.

- The mutex lock/unlock mechanism wraps the critical section where the balance is updated, ensuring consistent and sequential updates.

- After both threads are complete, the final account balance is displayed

Also Read: OS Interview Questions

Advantages and Disadvantages of the Critical Section Problem in OS

Advantages

- Data Integrity and Consistency: The appropriate solution to the critical section problem protects the integrity of shared variables, files, or other resources. In the absence of synchronisation, closely interacting processes could generate data inconsistency, especially due to multiple reads/writes occurring simultaneously.

- Prevention of Race Conditions: Through the resolution of the critical section problem it becomes possible to do away with race conditions which occur when the final output of processes varies depending on the order in which processes are run. Unpredictable outcomes may result from race conditions. Race conditions are avoided using techniques such as mutex and semaphore, as these ensure that a critical section can be accessed by one process at a time only.

- Compatibility with Legacy Code: A variety of programming languages and operating systems support critical sections, a well-known synchronisation technique. They are therefore compatible with current systems and codebases.

- Improved System Stability: The system becomes stable as a result of the implementation of mechanisms that ensure mutual exclusion and proper synchronisation of multiple processes in the system. Critical sections are handled in such a manner that data loss and deadlocks occur less often, thus improving the whole OS’s reliability.

- Prevention of Deadlocks and Starvation: Algorithms like the Banker’s Algorithm can further minimise deadlocks and starvation by regulating how resources are distributed within the system. Effective allocation of system resources is made in such a manner that processes will not be starved of resources waiting for an extended period.

Disadvantages

- Increased Complexity: Solutions to the critical section problem involve additional layers that add to the complexity of system design as well as development. The intricacies involved in the management of locks, semaphores, and other synchronisation mechanisms are numerous and require details. Even debugging concurrency problems may be a difficult and extensive process.

- Performance Overhead: Synchronisation techniques, such as the ones mentioned in the previous section, do incur performance costs. Constant locking and unlocking of particular resources, lots of context switching between processes and the need for mutual exclusion are factors that can contribute to poor performance of the system.

- Deadlocks: Despite mechanisms designed to avoid them, improperly implemented solutions to the critical section problem can lead to deadlocks, where two or more processes are stuck waiting for each other to release resources. Once in a deadlock, it is a stalemate for processes that need to be released, otherwise, the whole system will be in an infinite wait state, leading to system failure.

- Contention Issues: A situation can occur where multiple processes or threads need to perform operations within the same critical section. If the same section is accessed by different processes or threads many times; ultimately, the same section might suffer the due stress and performance depreciations.

- Risk of Livelock: Only in some cases processes may reach the so-called livelock state where all the processes are forever changing their state and are always attempting to perform some operation, however, none are successful at this point.

Conclusion

To sum up, we have seen every detail connected with the execution of the process of the critical section problem in this article. We saw several solutions to the critical section problem and various requirements. The critical section in OS is used to solve the race condition occurring due to process synchronisation.

Addressing the Critical Section Problem is essential to avoid interference among concurrent processes in an operating system (OS). These mechanisms include algorithms such as Peterson’s and Dekker’s for software and hardware solutions as well as synchronisation mechanisms such as semaphores and mutexes for the same problems. Get a full walkthrough of operating systems through the Certificate Program in Application Development powered by Hero Vired.

What is the critical section problem in the OS?

What are the approaches or solutions to handle the critical section problem in OS?

- Among the classic solutions belong Peterson’s Algorithm and Dekker’s Algorithm which offer mutual exclusion to processes in a two-process system through software means.

- Hardware mechanisms such as mutexes and software abstractions such as monitors provide similar mechanisms by coercing the system to allow resource allocation to one process at any given time.

- Semaphores are frequently used, which provide a control signal to adjust several processes vying for the critical section.

What is a race condition in OS?

What is Banker’s algorithm in OS?

What is semaphore in an OS, and how is it implemented?

- Binary semaphores: It functions similarly to mutex locks.

- Counting semaphores: It controls access to several instances of a resource.

Updated on January 16, 2025