Data warehousing plays a crucial role in managing large quantities of data to ensure business decision-making is done based on adequate data. As data warehouses are increasingly important in today’s data-driven companies, they need experts in data modelling, ETL processes, database management, etc. Welcome to this article; in this article, you will find frequently asked data warehouse interview questions for beginners and those who have already built up some experience.

What is a Data Warehouse?

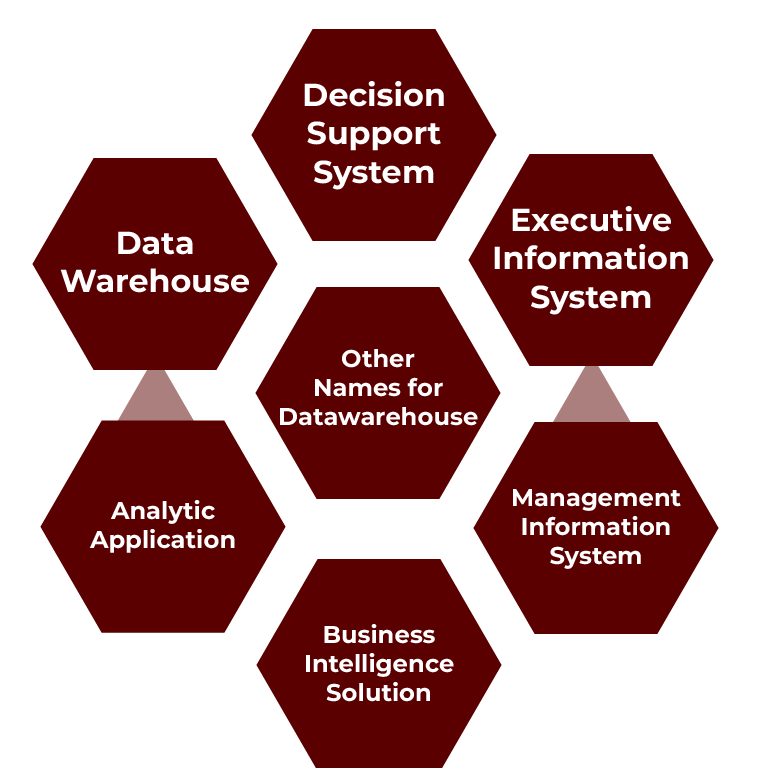

A data warehouse is a central repository located in which large volumes of structured data coming from several sources will be stored and from which they will be easily queryable, reportable, and analyzable. This integrates averaged information from various databases and systems and standardizes it to serve as a more useful tool for decision-making with reflections on key data. Data warehouses fundamentally differ from traditional enterprise databases, such as online transaction processing (OLTP) systems designed for processing transactions and not analytical queries.

POSTGRADUATE PROGRAM IN

Multi Cloud Architecture & DevOps

Master cloud architecture, DevOps practices, and automation to build scalable, resilient systems.

Data Warehouse Interview Question for Freshers

Let’s see some data warehouse interview questions for freshers.

1. What is an aggregate table in a Data warehouse?

An aggregate table is a table which contains data stored in an existing OLAP warehouse and groups it to a certain level of dimensions. It always takes less time and effort to query data from the aggregated table rather than from a table with more records than the aggregated table.

2. What do you understand about metadata in a data warehouse?

Data about the data is called metadata. The metadata includes fixed and limited widths, the number of columns used, data types, and fields’ ordering.

3. Define ER diagram in data warehousing.

An ER (Entity-Relationship) diagram is a scheme that depicts the mutual interactions between several entities in a database. In the diagram, all tables are depicted, and all the relations between them are shown also.

4. What do you understand by Star Schema?

Star Schema is in charge of managing the table in a way that results can be retrieved conveniently in the data warehouse platform.

5. What is the difference between agglomerative clustering and divisive hierarchical clustering?

Clusters are read from bottom to top in the agglomerative hierarchical clustering methods. Each object’s cluster builds its cluster, combining into a giant cluster. It merges continuously until we have one big cluster. Divisive hierarchical clustering, however, employs a top-to-bottom approach too. Here, the cluster is divided. The clusters are divided until they have only one object.

6. What are the testing phases in a project?

The stages of an ETL test are five — Identification of requirements and data sources, Data acquisition, Executing the business logic, Building and publishing data and Reporting.

7. What do you understand by data mart?

Data mart includes a subset of organization-wide data. This subset of data is insightful to specific groups in an organization. In simple words, the data mart contains group-specific data.

8. Give reasons for partitioning.

Partitioning has many reasons: to assist in backup recovery, improve performance, or simplify management.

9. What are the functions of a warehouse manager?

A warehouse manager must perform referential integrity and consistency checks across the base data to provide business views, indexes, and partition views. The source data is merged and transformed into the temporary store, data is backed up into the data warehouse, and the data is archived at the end of the captured life.

10. Explain virtual data warehousing.

The information system strategy of virtual data warehousing is supportive of analytical decision-making. The virtual data warehouse provides an overall look at the completed data. Therefore, it has no historical data and can be considered a logical data model. It enables a semantic map on which the end user views it like a virtualized object.

Also Read: Architecture of Data Warehouse – A Comprehensive Guide

11. What do you understand by Hybrid SCD?

Both SCD1 and SCD2 together combine to give Hybrid SCD. Hybrid SCDs — these tables will be part of and have important data of some type 1 and some type 2 columns, and we need to track the change (historical data for some type 1 and some type 2 columns) to implement it for the tables where some columns are essential.

12. Define snapshot concerning data warehousing.

Perfect (complete) data visualization at extraction time is called a snapshot. It’s used to back up and restore data and uses less space. It involves thinking about what went on. They are stored in a report format after the catalogue is disconnected and generated shortly after.

13. What are some of the functions performed by OLAP?

A snapshot is the distribution of complete data visualization at extraction time. It is used to back up backup data and restore space. It is a process of knowing the performed activities. The catalogue is disconnected from the report, so it stores the data in a report format generated soon after it is disconnected.

14. What are some of the functions performed by OLAP?

The primary functions performed by OLAP are:

- Roll up

- Slice

- Dice

- Drill-down

- Pivot

15. What do you understand by ODS?

An ODS(Operational Data Store) is a database designed for data integration and subsequent operations on data present in multiple sources. With no data, a real-time operational data repository is sent back to the Operating System. It can be passed for reporting to the Data Warehouse.

16. What is meant by dimensional modelling?

Data warehousing is about modelling. It is the structure of data into storage conceptually. It talks about data objects and how they are related to rules in the software that manages the other data. Multidimensional modelling is also possible with the data warehouse.

17. Name three primary functions of dimensions.

The primary functions of dimensions are:

- Filtering: It chooses a smaller part of our data set for analysis. It is generally temporary.

- Labelling: tagging a group of samples is called labelling. It makes data queryable.

- Grouping: classifying data or small buckets.

18. Explain Galaxy Schema

Fast Constellation or Galaxy Schema consists of dimensional tables and two fact tables. And here, it can be known as the combination of stars.

19. Define three types of SCD.

There are three types of SCD (slowly changing dimension).

- SCD 1: This overwrites the current record with a new record.

- SCD 2: To an existing customer dimension table, it creates another dimension record.

- SCD 3: This creates a current value field for including new data.

20. What is dice operation, and how many dimensions are there?

In a dice operation, grouping is done into data based on a particular category. Two or more dimensions are used within this process.

21. Give the main benefit of normalization.

The normalization process is used to reduce the data redundancy. It was helpful to validate the data in a way that makes more sense for the user when required.

22. What is the Query manager responsible for?

The query manager manages all the user queries and operations. The query manager complexity is defined and analyzed based on end-user access. Anger is defined and evaluated based on end-user access. The query manager manages all the user-generated queries within the environment to extract data.

23. Explain Junk Dimension

A junk dimension is a single dimension to put a small dimension such as junk attributes. The junk dimension is a sub-dimension that takes junk attributes as a group of text attributes and flags, stirring them into a junk sub-dimension.

24. What is meant by VLDB?

A very large database (VLDB) is one terabyte database. Storage space with the most extensive file available and many database rows is used as the storage space. Many users use decision support applications and training process applications on this database.

25. What is meant by Snowflake Schema?

Snowflake Schema refers to a Snowflake schema, i.e., a first-dimensional table that is a primary interlinked table for other tables. In fact table, it can be joined.

26. What is the difference between a materialised view and a view?

A materialized view is a base table copy (picture or snapshot of the base table). It allows query results to be stored in a separate schema and gains indirect access to table data. A view is a virtual table that queries against, making it a replacement for tables and taking its output.

27. What is the difference between a materialized view and a view?

A materialized view is a physical copy, picture or snapshot of a base table. It enables query results to be retrieved from a separate schema (saving the table data) and provides indirect access to the table data. A view is equivalent to a virtual table but is used instead of the tables, taking the query’s output.

28. What do you understand by active data warehousing?

An active data warehouse is a data warehouse that can record transactions as they change and marry the transactions into the warehouse along with scheduled or batch cycle refreshers.

Also Read: Data Warehousing and Data Mining in Detail

29. Explain ETL.

ETL stands for Extract, Transform and Load, a software tool that can extract data from various sources, then transform data by adding, doing calculations, etc, and then load it to the Data Warehouse system. The inputs needed to execute this are active and include developers, testers, analysts, etc.

30. What are non-additive facts?

The Non-addictive facts are facts that are not summed up for any dimensions present in the fact tables. The same facts can be helpful if there are changes in the dimensions.

Conclusion

One must be very well-versed in preparing for a data warehouse interview as it covers basic understandings of data warehousing, including ETL, schema, and data modelling. Prepare to explain what convinces the star and snowflake schemas and the distinctions between OLAP and OLTP systems. Familiarity with common data warehouse applications and SQL and how to optimize its queries also proves useful, as well as knowledge about Amazon Redshift and Snowflake platforms. More emphasis on data governance, data quality, and performance tuning will increase your preparedness during the interview. To learn more about databases professionally, you can try the Accelerator Program in Business Analytics and Data Science by Hero Vired offered in collaboration with edX and Harvard University.

What are the key components of a data warehouse?

What are OLAP and OLTP, and how are they different?

What is a star schema?

What is a data mart, and how does it relate to a data warehouse?

What are the benefits of a data warehouse?

Updated on December 12, 2024